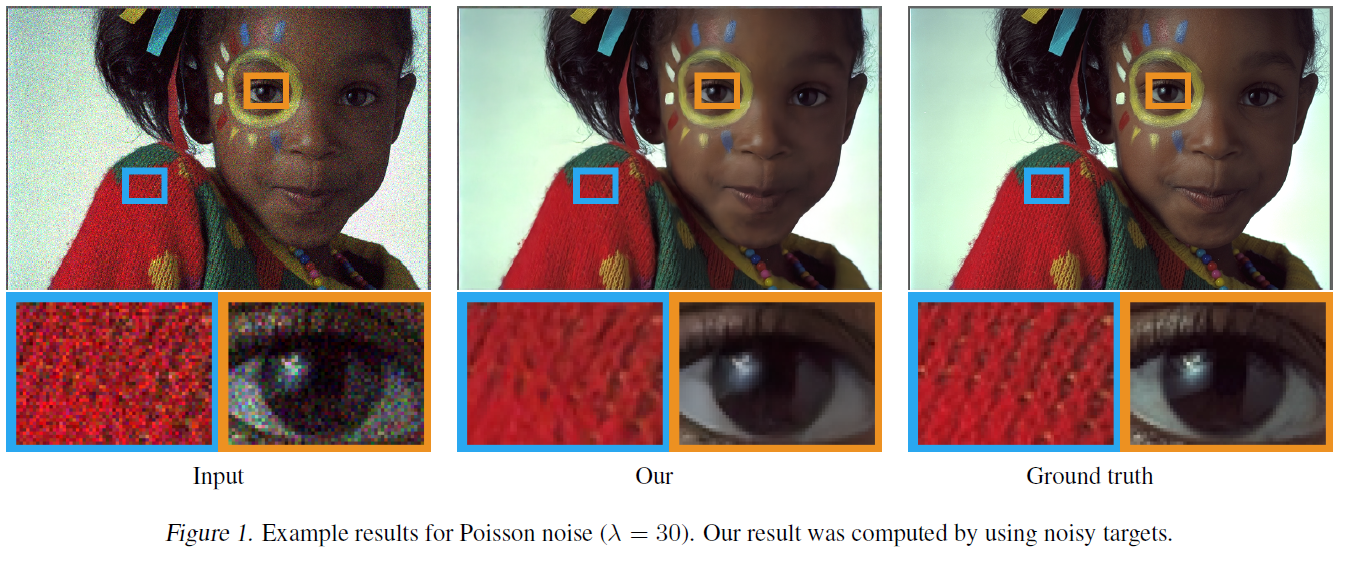

NVIDIA has announced impressive progress in using AI to remove noise from “grainy” images without ever giving the model a clean version of the image to learn from.

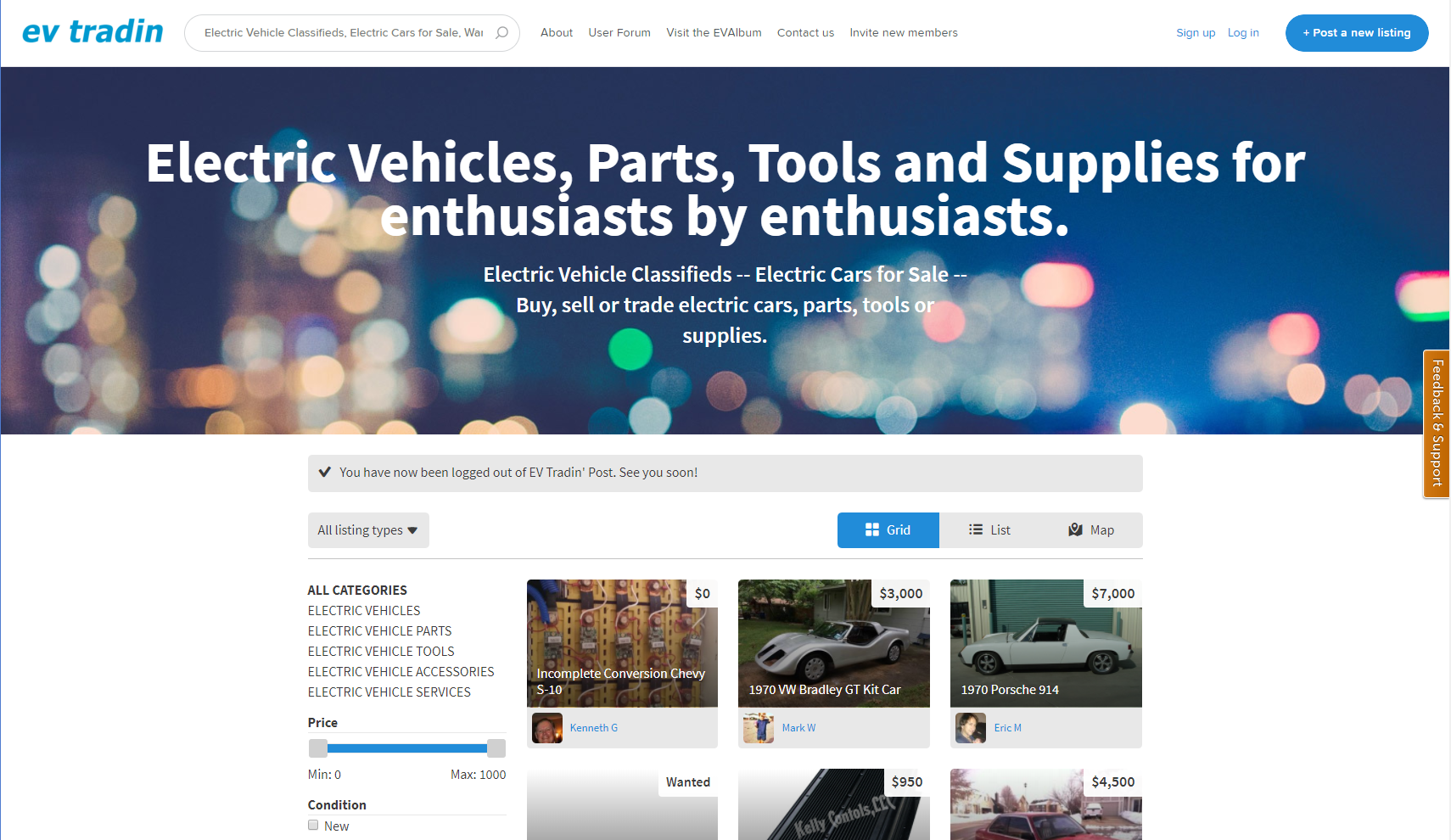

By “noise,” they mostly mean the graininess of a low-light digital photo, but a side benefit is that the same approach can also easily remove textual noise, such as text scattered over an image. For now, the result is “softer” than the original clean image, but I’m curious whether this will end up causing problems for watermarking or other copy-protection schemes. At what point does “good enough” become sufficient for derivative use, given that we deal in low-resolution imagery on the web all the time?

Many of us in collections rely on watermarks to make openly sharing our collections more palatable to our donors. We already have to warn them that there is no low-barrier way to truly prevent unattributed reuse of images… This is simply going to make that conversation even more difficult.